Differential Privacy: A way to balance privacy and data analysis

14. May 2018

The latest scandal surrounding Cambridge Analytica has put the Internet giant Facebook into the biggest crisis in its history. And yet this is just one prominent example of the misuse of sensitive data. No wonder people are increasingly concerned about how and by whom their personal information is used.

“The loss of privacy is the ‘global warming’ of the information age.”

If data is the oil of the information age, then the loss of data privacy might be its “global warming”. While some deny that there is a loss of data privacy, others see no connection between recent scandals. Others are seriously afraid of the consequences. If nothing is done, Facebook and the data analysis community face a backlash of strict regulations. These could seriously threaten their business model and their future – just as automobile manufacturers are regulated by emission standards or the tobacco industry by advertising restrictions.

But there are already solutions that can help to exploit the potential of data analysis while preserving privacy. One way to deal responsibly with individual information is Differential Privacy. Simply speaking, it means to add “noise” to a data set so that the privacy of the individual is protected. However, the overall analysis remains meaningful and delivers high-quality results.

How Differential Privacy works

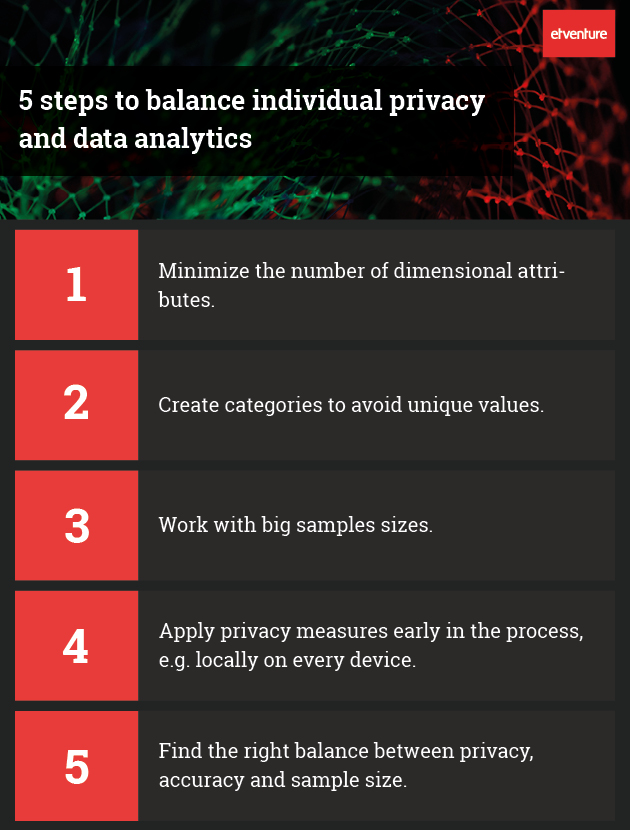

Records can not be anonymized by simply replacing names with IDs or other placeholders. Especially with data with many attributes or many unique values, individuals are easy to identify. This should have been known by 2006 at the latest when AOL published “anonymized” data from its search engine. Nevertheless, the misbelief is still widely spread today.

A common example to illustrate Differential Privacy is based on randomized response techniques from the 1960s. To collect honest answers to sensitive private questions within interviews, respondents are given a credible way to deny their individual answer at a later point in time. For example, the respondent flips a coin before answering the question. If it shows “head”, he or she is supposed to answer the question with “yes” regardless of the truth. In the case of “tail”, the respondent shall answer truthfully. Therefore, the interviewer can expect one half of the “yes”-answers to be forced by the coin flip and the other half to reflect the truth. As a consequence, the percentage of “no”-answers observed is only half of the percentage of the truth. Because the coin flip is performed by the respondent in secret, her/his individual “yes”-answer may have been forced by the coin toss. Therefore, participants retain their privacy, since they later can deny that they had answered honestly.

A common method of adding “noise” to a non-category data set is to use a symmetric distribution, such as Laplace or normal distribution. The level of noise added provides a data analyst with an amount of “Privacy Budget” to perform analytical tasks with a specific data set. By measuring and controlling this budget individual privacy can be preserved. At the same time, explanatory power of the overall data set is not harmed.

Real-world applications

Differential Privacy is already used in many analytics projects today. Apple in particular encourages intensive use in its products. What users enter on their iPhone keyboards or which websites they visit are highly critical information. Apple has created an infrastructure to respond to specific analytical use cases without compromising the privacy of users. Whether it’s which websites consume the most energy or which emojis are the most popular, differential privacy methods are applied before the data leaves the individual device. Identifiers and timestamps are removed, the order of events collected is shuffled and randomized data is added. In Apple’s cloud, information from numerous end devices is collected and a further layer of differential privacy tasks is applied.

Data analysis at the crossroads

Data protection and privacy are a matter of urgency. How information is handled by individuals will determine whether the current extensive freedom of movement in data analysis will last. This can have fatal consequences for the business models of leading Internet and technology groups.

If the data analysis community fully exploits its “privacy budget”, the public will soon see more risks than opportunities in technology. Proven methods such as Differential Privacy can help to restore a healthy balance between the protection of privacy and the need for statistical accuracy. It is therefore obvious that Differential Privacy will become a common tool for every data analyst in the near future.

* Required field